Table of Contents

- Introduction

- Understanding Language Model Learning (LLM)

- The Power of Transformers in AI

- Applications of LLM and Transformers

- Training LLM Models for Optimal Performance

- Challenges and Limitations of LLM and Transformers

- The Future of LLM and Transformers

- Summary and Key Takeaways

- FAQs

Introduction

Artificial Intelligence (AI) has become an integral part of many industries. It gives machines the ability to learn, reason, and make decisions, and it increasingly shapes our daily lives. One fascinating area of AI is the development of Language Model Learning (LLM) and Transformers. In this article, we explore LLM and Transformers: what they are, how they evolved, and why they matter in the field of AI.

Understanding Language Model Learning (LLM)

Definition and Purpose of LLM

In this article, Language Model Learning (LLM) refers to the process of training machines to understand and generate human language. (Elsewhere, the acronym LLM usually stands for "large language model", the kind of model such training produces.) The purpose of LLM is to enable machines to comprehend and produce natural language text or speech, allowing for more effective communication between humans and machines.

Historical Evolution of LLM

Over the years, LLM has evolved significantly. Early approaches involved manually defining linguistic rules, which could not capture the complexity and diversity of human language. With the advent of machine learning, LLM entered a new era: models began to learn language patterns directly from data instead of relying on hand-written rules.

Different Types of LLM Approaches

- Supervised Learning: Machines learn from labeled datasets, where human experts provide the correct outputs for given inputs. This approach is commonly used for tasks like text classification and named entity recognition.

- Unsupervised Learning: Unlike supervised learning, unsupervised LLM does not rely on labeled data. Instead, it seeks to find patterns and structures within the data without any prior knowledge. Techniques like clustering and dimensionality reduction are used in this approach.

- Reinforcement Learning: Machines learn through trial and error under a reward-based system. They perform actions and receive feedback in the form of rewards or penalties, enabling them to optimize their behavior autonomously.
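To make the idea of learning from unlabeled text more concrete, here is a minimal sketch of a bigram model, which simply counts which word tends to follow which. This is a deliberately simple stand-in and not how modern Transformer-based models work; the tiny corpus and the function names are our own assumptions for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(sentences):
    """Count, for each word, which words follow it in the training text."""
    follow_counts = defaultdict(Counter)
    for sentence in sentences:
        # <s> and </s> mark the start and end of each sentence.
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for current_word, next_word in zip(tokens, tokens[1:]):
            follow_counts[current_word][next_word] += 1
    return follow_counts


def next_word_probabilities(model, word):
    """Turn the raw follow-up counts for one word into probabilities."""
    counts = model[word]
    total = sum(counts.values())
    return {candidate: count / total for candidate, count in counts.items()}


# Hypothetical toy corpus: no labels, just raw sentences.
corpus = [
    "the cat sat on the mat",
    "the dog sat on the rug",
    "the cat chased the dog",
]

model = train_bigram_model(corpus)
print(next_word_probabilities(model, "sat"))
print(next_word_probabilities(model, "the"))
```

No one tells the model that "on" follows "sat"; it picks this up from the text alone, which is the core idea behind learning language patterns without labels.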

The Power of Transformers in AI

Transformers, a groundbreaking innovation in the field of AI, have revolutionized Natural Language Processing (NLP) with their exceptional capabilities.

Introduction to Transformers

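Transformers are neural network architectures that excel at handling sequential data, such as sentences or speech. Earlier sequence models were mostly recurrent neural networks (RNNs), which read a sequence one token at a time. Transformers instead rely on attention, which lets every position in the sequence look at every other position directly, and this is a major reason for their success in NLP tasks.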

How Transformers Revolutionized NLP

Transformers are built around self-attention, a mechanism that captures the relationships and dependencies between different words or tokens in a sentence. Because self-attention does not need to step through the sequence in order, all tokens can be processed in parallel, which makes Transformers more efficient to train than recurrent models and helps them understand and generate natural language more accurately.

Key Features of Transformers

- Self-Attention Mechanism: Self-attention lets a Transformer weigh the importance of each word or token in a sequence based on its relevance to the other words, which helps it capture contextual information effectively.

- Encoder-Decoder Architecture: The original Transformer uses an encoder-decoder architecture, where the encoder learns to represent the input sequence and the decoder generates the output sequence. This design is well suited to tasks like machine translation and text summarization. Some later models keep only one half: BERT uses just the encoder, while GPT uses just the decoder.

- Contextual Embeddings: Transformers represent words or tokens in a way that reflects their context within the sentence, so the same word can receive a different representation in different sentences. This context-awareness enhances the quality of language understanding and generation.
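The sketch below shows scaled dot-product self-attention in plain Python, and how it turns a fixed word vector into a contextual one. It is a simplified illustration under stated assumptions: a real Transformer layer first applies learned projection matrices to obtain queries, keys, and values, which we skip here, and the 2-dimensional embeddings are made up for the example.

```python
import math


def softmax(scores):
    """Convert raw scores into positive weights that sum to 1."""
    largest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def self_attention(vectors):
    """Scaled dot-product self-attention.

    Assumption for brevity: queries, keys, and values are the input
    vectors themselves (no learned projections).
    """
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score this token against every token, scaled by sqrt(dim).
        scores = [dot(query, key) / math.sqrt(dim) for key in vectors]
        weights = softmax(scores)
        # The output is a weighted average of all value vectors.
        mixed = [
            sum(w * value[i] for w, value in zip(weights, vectors))
            for i in range(dim)
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical embeddings, chosen only to make the effect visible.
embeddings = {"river": [1.0, 0.0], "money": [0.0, 1.0], "bank": [0.5, 0.5]}

near_river, weights_a = self_attention([embeddings["river"], embeddings["bank"]])
near_money, weights_b = self_attention([embeddings["money"], embeddings["bank"]])

print("bank next to river:", near_river[1])
print("bank next to money:", near_money[1])
```

The input vector for "bank" is identical in both calls, yet its output differs depending on its neighbor. That is the sense in which the resulting embeddings are contextual. Note also that each token's output is computed independently of the others, which is what allows the work to be done in parallel.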

Applications of LLM and Transformers

The power of LLM and Transformers extends to various applications, changing the landscape of AI and improving human-machine interaction.

LLM and Transformers in Machine Translation

LLM and Transformers have advanced the field of machine translation, enabling more accurate and fluent translations between different languages. By learning the patterns and structures of multilingual text data, LLM models can provide high-quality translations for a wide range of languages.

Natural Language Understanding with LLM and Transformers

LLM models, fueled by Transformers, excel in natural language understanding tasks. They can accurately comprehend the meaning, context, and sentiment of human language, making them invaluable in fields like sentiment analysis, question answering systems, and chatbots.

Speech Recognition and Synthesis using Transformers

Transformers have also played a significant role in speech recognition and synthesis. By leveraging contextual embeddings and self-attention mechanisms, Transformers can transcribe spoken words with exceptional accuracy and generate lifelike synthesized speech, contributing to advancements in voice-based technologies.

Sentiment Analysis and Text Classification with LLM

LLM models, in conjunction with Transformers, facilitate sentiment analysis and text classification tasks. By understanding the sentiment behind textual data, LLM models can identify positive, negative, or neutral sentiments, allowing businesses to gain valuable insights from customer feedback and reviews.

LLM and Transformers in Chatbots and Virtual Assistants

Chatbots and virtual assistants have become increasingly intelligent and conversational due to LLM and Transformers. By leveraging these technologies, chatbots can understand user queries more accurately and provide relevant responses, enhancing user experience and efficiency.

Training LLM Models for Optimal Performance

Achieving optimal performance with LLM models involves careful training and fine-tuning. Several key steps contribute to the success of LLM models:

Data Collection and Preprocessing

Collecting diverse, high-quality datasets is crucial for training LLM models. Preprocessing steps such as tokenization clean and standardize the data before training. Stemming and stop-word removal are also common in classical NLP pipelines, although Transformer-based models typically work on subword tokens and keep the full text.
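As a rough illustration of these preprocessing steps, the sketch below tokenizes a sentence, drops stop words, and applies a very crude suffix-stripping stemmer. The stop-word list and suffix rules are assumptions made up for this example; production pipelines would normally use an established tokenizer or stemmer instead.

```python
import re

# Small illustrative stop-word list (an assumption, not a standard list).
STOP_WORDS = {"the", "a", "an", "is", "and", "of", "to"}

# Suffixes removed by the crude stemmer, checked in this order.
SUFFIXES = ("ing", "ed", "s")


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def crude_stem(token):
    """Strip one known suffix, keeping at least three characters."""
    for suffix in SUFFIXES:
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def preprocess(text):
    """Tokenize, remove stop words, then stem the remaining tokens."""
    return [crude_stem(t) for t in tokenize(text) if t not in STOP_WORDS]


print(preprocess("The model is learning the patterns of training texts."))
# prints ['model', 'learn', 'pattern', 'train', 'text']
```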

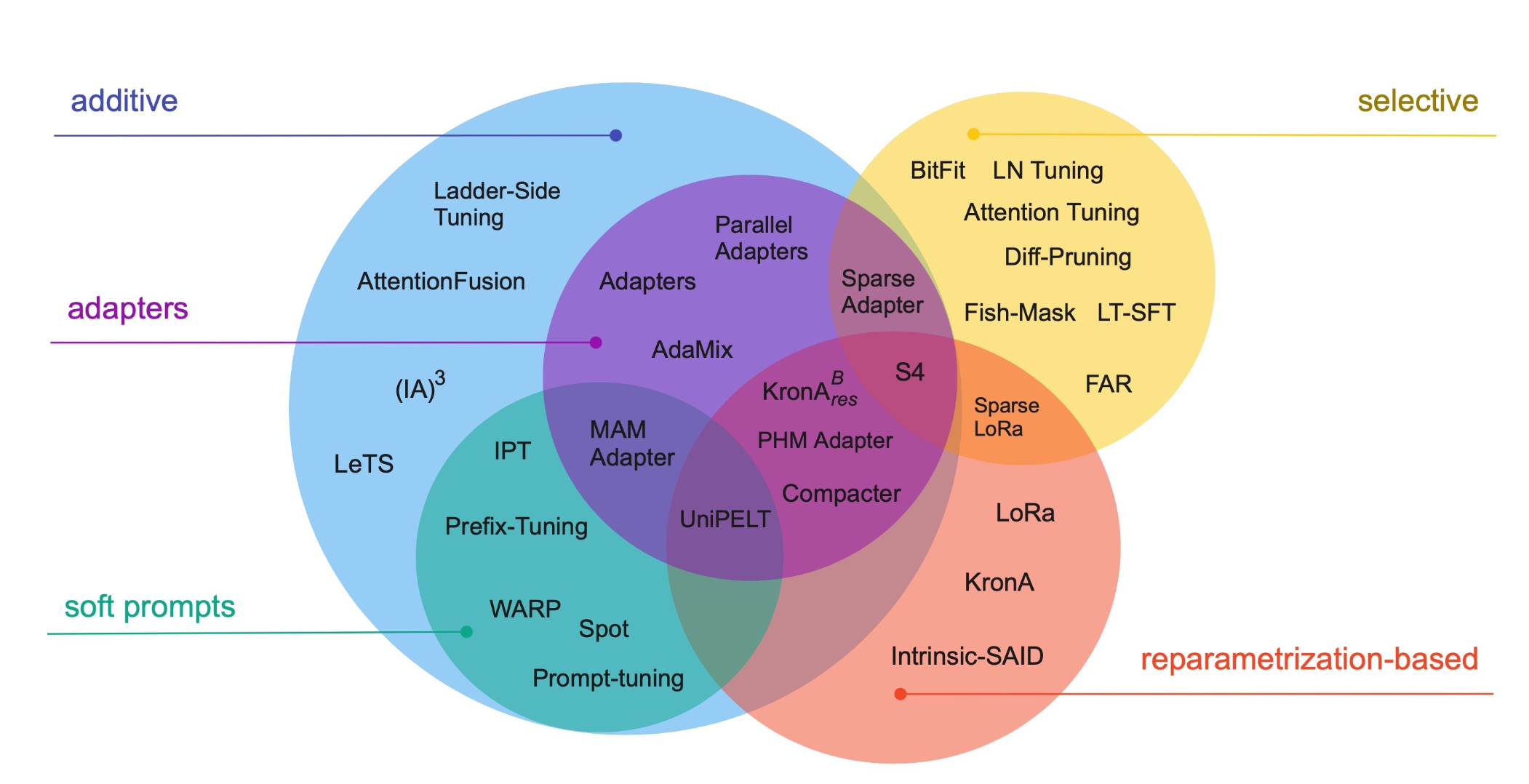

Selecting Appropriate LLM Architectures

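Various LLM architectures offer different strengths for different tasks. GPT (Generative Pre-trained Transformer) is a decoder-only model geared toward text generation, BERT (Bidirectional Encoder Representations from Transformers) is an encoder-only model geared toward understanding tasks such as classification, and T5 (Text-to-Text Transfer Transformer) is an encoder-decoder model that frames every task as turning one text into another. Selecting the most suitable architecture for a specific application is essential for achieving optimal performance.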
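One concrete difference between these families is which positions each token is allowed to attend to. The sketch below builds the two attention masks involved: a bidirectional mask, as used in BERT-style encoders, and a causal mask, as used in GPT-style decoders. The function names are our own, and real implementations build these masks as tensors rather than nested lists.

```python
def bidirectional_mask(length):
    """Every position may attend to every other position."""
    return [[True] * length for _ in range(length)]


def causal_mask(length):
    """Each position may attend only to itself and earlier positions."""
    return [[key <= query for key in range(length)] for query in range(length)]


def show(mask):
    """Render a mask with 'x' for allowed and '.' for blocked positions."""
    return "\n".join(
        " ".join("x" if allowed else "." for allowed in row) for row in mask
    )


print("Bidirectional (BERT-style):")
print(show(bidirectional_mask(4)))
print("Causal (GPT-style):")
print(show(causal_mask(4)))
```

The causal mask is what lets a decoder generate text one token at a time without peeking at future tokens, while the bidirectional mask lets an encoder use context from both sides of a word.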

Fine-tuning and Hyperparameter Tuning for Desired Results

After pre-training the LLM models on large-scale datasets, fine-tuning is necessary to adapt them to specific tasks. Hyperparameter tuning, including adjusting learning rates and batch sizes, further refines the model’s performance for improved results.
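Hyperparameter tuning can be as simple as trying each combination of settings and keeping the one with the lowest validation loss. The sketch below does this grid search for learning rate and batch size on a tiny one-parameter model. The model, data, and candidate values are toy assumptions chosen so the example runs instantly; tuning a real LLM follows the same loop but with far more expensive training runs.

```python
from itertools import product

# Toy data for illustration only: the target relationship is y = 2x.
TRAIN = [(x, 2.0 * x) for x in range(1, 9)]
VALID = [(x, 2.0 * x) for x in (9, 10)]


def train_and_validate(learning_rate, batch_size, epochs=20):
    """Fit y = w * x with mini-batch gradient descent.

    Returns the mean squared error on the validation set.
    """
    w = 0.0
    for _ in range(epochs):
        for start in range(0, len(TRAIN), batch_size):
            batch = TRAIN[start:start + batch_size]
            # Gradient of the mean squared error with respect to w.
            gradient = sum(2 * (w * x - y) * x for x, y in batch) / len(batch)
            w -= learning_rate * gradient
    return sum((w * x - y) ** 2 for x, y in VALID) / len(VALID)


learning_rates = [0.0001, 0.001, 0.01]
batch_sizes = [2, 4, 8]

# Evaluate every combination of the two hyperparameters.
results = {
    (lr, bs): train_and_validate(lr, bs)
    for lr, bs in product(learning_rates, batch_sizes)
}

best_config = min(results, key=results.get)
print("best (learning rate, batch size):", best_config)
print("validation loss:", results[best_config])
```

Grid search is only one option; random search and more adaptive methods are often preferred when each training run is costly.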

Strategies to Improve LLM Model Performance

To enhance LLM model performance, techniques such as transfer learning, ensemble methods, and regularization can be employed. These strategies help reduce overfitting, enhance generalization, and improve overall model accuracy.
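As a small example of an ensemble method, the sketch below combines the predictions of three classifiers by majority vote. The model names and their predictions are hypothetical values invented for the illustration; in practice they would come from separately trained models.

```python
from collections import Counter


def majority_vote(votes):
    """Return the label that most models predicted for one example."""
    label, _ = Counter(votes).most_common(1)[0]
    return label


true_labels = ["pos", "neg", "pos"]

# Hypothetical predictions: each model is wrong on a different example.
model_outputs = {
    "model_a": ["pos", "neg", "neg"],
    "model_b": ["pos", "pos", "pos"],
    "model_c": ["neg", "neg", "pos"],
}

# zip(*...) groups the predictions example by example.
ensemble = [majority_vote(votes) for votes in zip(*model_outputs.values())]


def accuracy(predictions):
    correct = sum(p == t for p, t in zip(predictions, true_labels))
    return correct / len(true_labels)


for name, predictions in model_outputs.items():
    print(name, accuracy(predictions))
print("ensemble", accuracy(ensemble))
```

Because the three models make their mistakes on different examples, the vote cancels those mistakes out. The benefit shrinks when models tend to fail on the same inputs.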

Challenges and Limitations of LLM and Transformers

While LLM and Transformers have made significant advancements in AI, they are not without challenges and limitations.

Bias and Ethical Concerns in LLM Models

LLM models trained on biased data can inherit and perpetuate societal biases, leading to discriminatory outputs. Addressing this issue requires careful selection of training data, diverse perspectives, and continuous monitoring to ensure fairness and inclusivity.

Scaling Issues with Large LLM Models

The increasing size and complexity of LLM models bring scalability challenges. Training large-scale models requires significant computational resources, limiting their accessibility and applicability for smaller organizations or resource-constrained environments.
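A quick back-of-the-envelope calculation shows why size becomes a problem. The sketch below estimates the memory needed just to hold a model's weights, using the standard sizes of 4 bytes per 32-bit float and 2 bytes per 16-bit float. The 7-billion-parameter model is a hypothetical example, and the function name is our own.

```python
# Bytes needed to store one parameter at each numeric precision.
BYTES_PER_PARAMETER = {"fp32": 4, "fp16": 2}


def weight_memory_gib(num_parameters, precision="fp32"):
    """Memory in GiB required to store the model weights alone."""
    return num_parameters * BYTES_PER_PARAMETER[precision] / 1024**3


# Hypothetical model size used only for illustration.
num_parameters = 7_000_000_000

for precision in ("fp32", "fp16"):
    gib = weight_memory_gib(num_parameters, precision)
    print(f"{precision}: {gib:.1f} GiB")
```

This counts the weights only. Training needs considerably more memory on top of that for gradients, optimizer state, and activations, which is a large part of why big models are out of reach for smaller organizations.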

Domain-Specific Limitations of Transformers

Transformers, while versatile, perform better in some domains than others. Models trained on specific corpora may struggle to generalize well beyond the domains they were trained on. Careful consideration of domain-specific requirements is crucial for achieving optimal results.

The Future of LLM and Transformers

Ongoing research and advancements in LLM and Transformers are shaping the future of AI and expanding their potential applications.

Ongoing Research and Advancements

Researchers continue to explore new techniques and architectures to further improve LLM models and Transformers. Ongoing research focuses on overcoming existing limitations, reducing biases, and optimizing resource utilization for broader adoption.

Potential Applications in Various Industries

The potential applications of LLM and Transformers extend across industries such as healthcare, finance, customer service, education, and entertainment. These technologies can enhance medical diagnosis, automate financial analysis, improve customer interactions, personalize learning experiences, and create more immersive storytelling.

Predictions for the Future of LLM and Transformers

The future of LLM and Transformers holds tremendous promise. It is anticipated that LLM models will become more interpretable, explainable, and capable of reasoning. Additionally, advancements in hardware and computing infrastructure will enable the training and deployment of even larger and more sophisticated LLM models.

Summary and Key Takeaways

In this article, we explored the world of LLM and Transformers. We discussed the significance of AI today and introduced the concepts of LLM and Transformers. We looked at the historical evolution of LLM, how Transformers revolutionized NLP, their key features, and the applications they enable. We then examined how LLM models are trained for optimal performance and discussed the challenges and limitations that come with these technologies. Finally, we looked ahead to the future of LLM and Transformers and their potential impact across industries. As AI continues to progress, LLM and Transformers remain at the forefront, reshaping how we interact with machines and advancing the field of natural language understanding.

FAQs

What are the main advantages of using LLM and Transformers?

LLM and Transformers offer several advantages, including improved natural language understanding, enhanced machine translation, accurate sentiment analysis, and more conversational chatbots. They revolutionize NLP by capturing contextual information effectively and improving language generation capabilities.

How do LLM and Transformers improve natural language understanding?

By leveraging the power of the self-attention mechanism and contextual embeddings, LLM and Transformers excel at capturing the relationships and nuances within natural language. They enable machines to understand context, sentiment, and meaning, leading to improved natural language understanding.

Can LLM models be biased? How can this be addressed?

LLM models can inherit biases from the data they are trained on, potentially leading to biased outputs. Addressing this issue requires rigorous data selection, diverse training datasets, and ongoing monitoring to mitigate bias and ensure fairness in model outputs.

Are there limitations to the size of LLM models?

The increasing size of LLM models presents scalability challenges, requiring significant computational resources for training. Limited access to high-performance hardware and the associated costs may pose limitations on the size and deployment of LLM models in certain environments.
